The home for prompt engineering—discover, manage, version, test, and deploy prompts in a community-driven platform

The collaboration platform for prompts

Host and share unlimited public prompts with the community, or keep them private among teammates, and discover prompts others have shared

Manage, version, and deploy

Organize your prompts with Git-based versioning and a simple API

AI tools for writing prompts

Best-in-class tools to help you generate and enhance prompts using AI

Build your portfolio

Share your prompts with the world, grow your reputation, and show your expertise

Evaluate prompts at scale with a few clicks

Run evals in a simple UI across your test cases

Say goodbye to spreadsheets

Easily compare outputs side-by-side when tweaking prompts

Test across different models

Compare outputs side-by-side

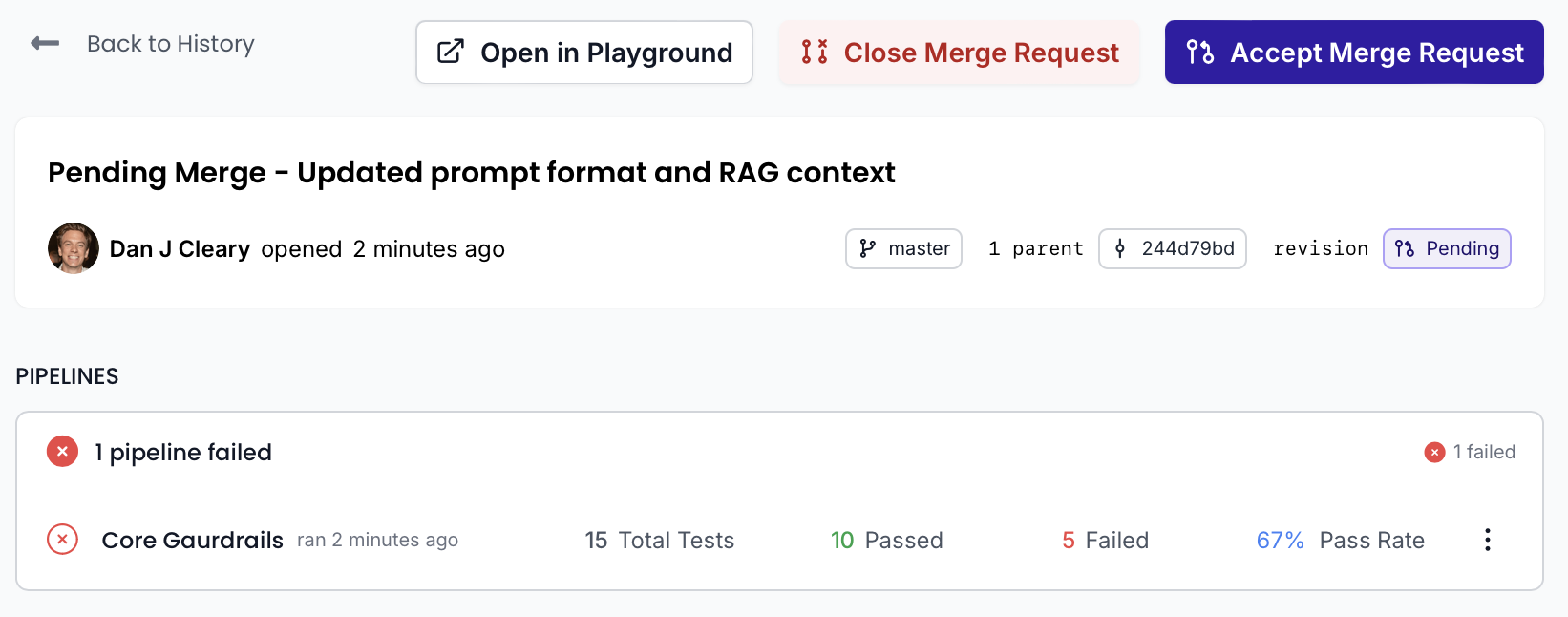

Ship prompts with guardrails

Run pipelines of evaluators on every commit or merge to keep secret leaks, profanity, and regressions out of prod.

As a medical doctor and prompt engineering lead, I needed a prompt management tool that would be simple and powerful for my team. PromptHub is the best in the market when it comes to this balance.

Collaborate with thousands of AI builders to discover, manage, and improve prompts—free to get started.