This guide is for anyone using the LangChain framework in their product.

Integrating PromptHub with LangChain allows you to easily manage, version, and retrieve prompts while maintaining flexibility in how you structure and execute workflows in your code.

In this guide, we’ll walk through how to:

- Retrieve prompts from your PromptHub library using the Get a Project’s Head (/head) endpoint

- Dynamically populate variables with data from your application

- Update variable syntax and structure to ensure compatibility with LangChain

- Use LangChain to execute and process the prompts within your workflows

Before we jump in, here are a few resources you may want to open in separate tabs:

- PromptHub: PromptHub documentation

- LangChain: LangChain documentation

Setup

Here’s what you’ll need to integrate PromptHub with LangChain and test locally:

Accounts and Credentials

- A PromptHub account: Create a free account here if you don’t have one yet

- PromptHub API Key: Generate your API key in the account settings

- LangChain installed: Make sure you've installed LangChain locally

Tools

- Python

- Required Python Libraries: requests, langchain, langchain-anthropic

- LangChain Imports: You’ll use the following imports in your script:

from langchain.prompts import ChatPromptTemplate

from langchain.schema import SystemMessage, HumanMessage, AIMessage

from langchain_anthropic import ChatAnthropic

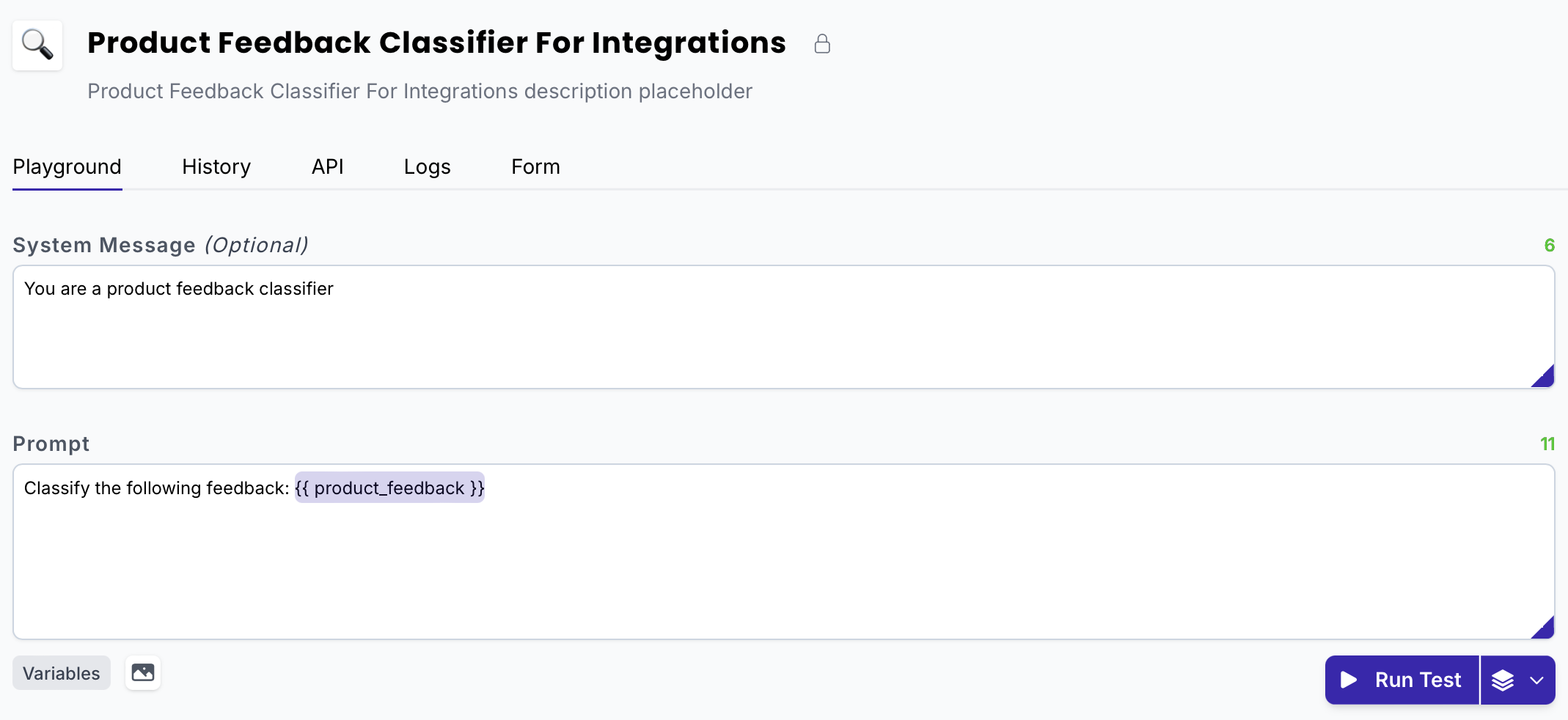

Project setup in PromptHub

- Create a project in PromptHub and commit an initial version. You can use our prompt generator to get a solid prompt, quickly.

- For this guide, we’ll use the following example prompt:

System message

You are a product feedback classifier

Prompt

Classify the following feedback: {{product_feedback}}

Retrieving a prompt from your PromptHub library

To programmatically retrieve a prompt from your PromptHub library, you’ll need to make a request to the /head endpoint.

The /head endpoint returns the most recent version of your prompt. We'll pass this through to LangChain.

Making the request

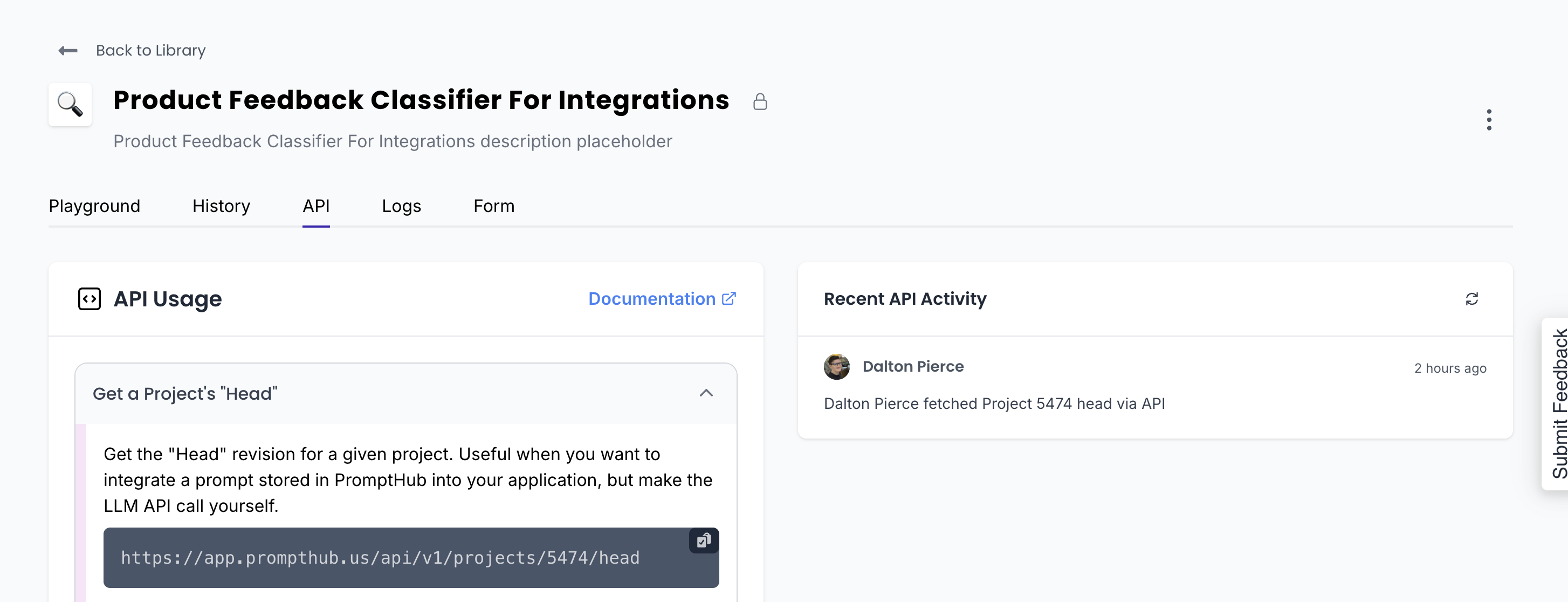

Retrieving a prompt is easy—all you need is the project ID. You can find the project ID in the project URL or under the API tab when viewing a project.

The response from this /head endpoint includes a few important items:

- formatted_request: A structured object ready to be sent to a model (Claude 3.5 Haiku, Claude 3 Opus, GPT-4o, etc.). We format it based on whichever model you're using in PromptHub, so all you need to do is send it to your LLM provider. Here are a few of the more important pieces of data in the object:

  - model: The model set when committing a prompt in the PromptHub platform

  - system: The top-level field for system instructions, separate from the messages array (per Anthropic's request format)

  - messages: The array that has the prompt itself, including placeholders for variables

  - Parameters like max_tokens, temperature, and others

- variables: A dictionary of variables and their values set in PromptHub. You’ll need to inject your data into these variables.

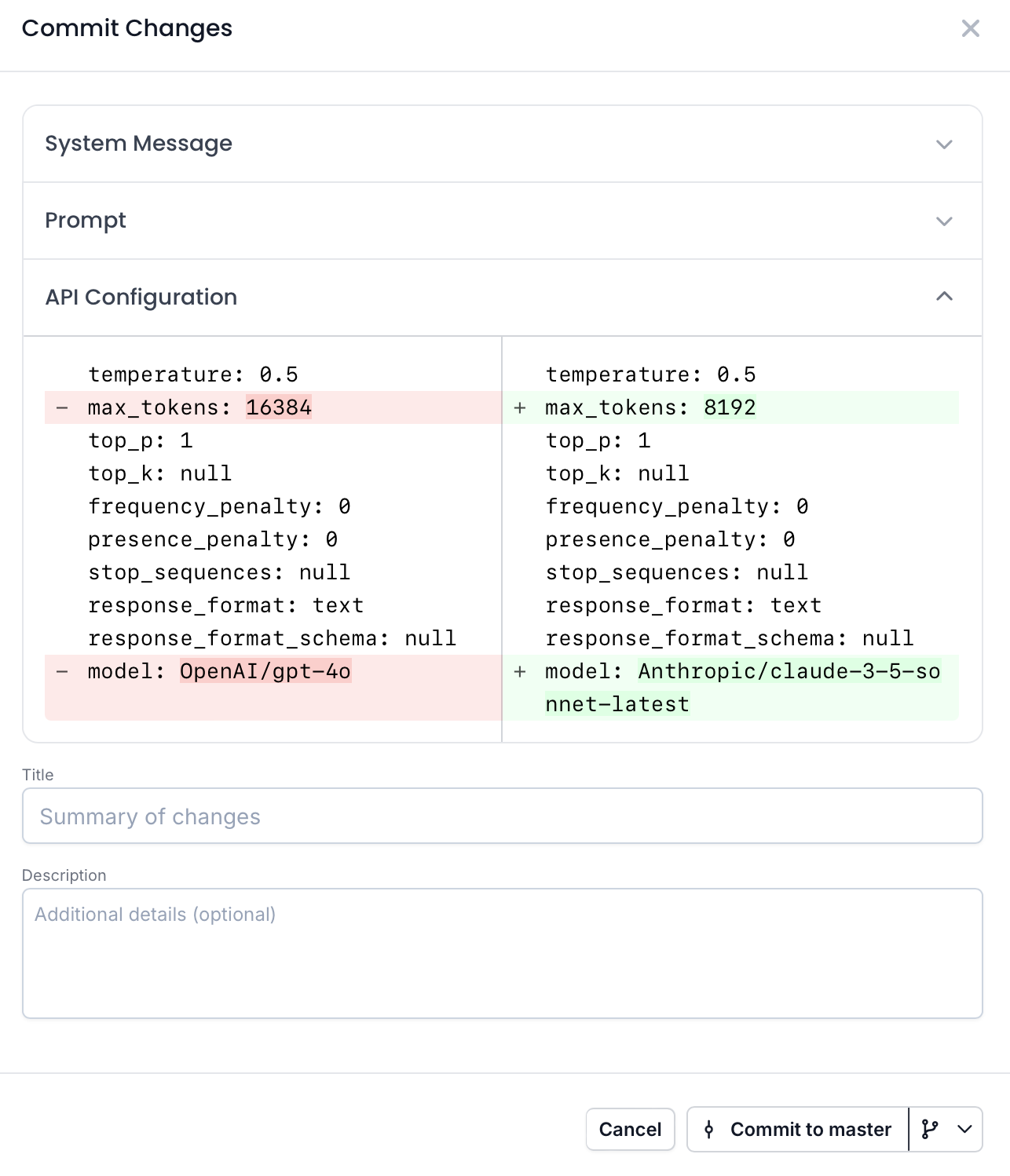

PromptHub versions both your prompt and the model configuration. So any updates made to the model or parameters will automatically reflect in your code.

For example, in the commit modal below, you can see that I am updating the API configuration from GPT-4o (used when creating our OpenAI integration guide) to Sonnet-3.5-latest. This automatically updates the model in the formatted_request.

Here’s an example response from the /head endpoint for our feedback classifier:

{

"data": {

"id": 11944,

"project_id": 5474,

"user_id": 5,

"branch_id": 8488,

"provider": "Anthropic",

"model": "claude-3-5-sonnet-latest",

"prompt": "Classify the following feedback: {{ product_feedback }}",

"system_message": "You are a product feedback classifier",

"formatted_request": {

"model": "claude-3-5-sonnet-latest",

"system": "You are a product feedback classifier",

"messages": [

{

"role": "user",

"content": "Classify the following feedback: {{ product_feedback }}"

}

],

"max_tokens": 8192,

"temperature": 0.5,

"top_p": 1,

"stream": false

},

"hash": "d72de39c",

"commit_title": "Updated to Claude 3.5 Sonnet",

"commit_description": null,

"prompt_tokens": 12,

"system_message_tokens": 7,

"created_at": "2025-01-07T19:51:14+00:00",

"variables": {

"system_message": [],

"prompt": {

"product_feedback": "the product is great!"

}

},

"project": {

"id": 5474,

"type": "completion",

"name": "Product Feedback Classifier For Integrations",

"description": "Product Feedback Classifier For Integrations description placeholder",

"groups": []

},

"branch": {

"id": 8488,

"name": "master",

"protected": true

},

"configuration": {

"id": 1240,

"max_tokens": 8192,

"temperature": 0.5,

"top_p": 1,

"top_k": null,

"presence_penalty": 0,

"frequency_penalty": 0,

"stop_sequences": null

},

"media": [],

"tools": []

},

"status": "OK",

"code": 200

}
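In the full response above, the variables object is grouped by section (system_message and prompt), and an empty section appears as an empty list rather than an empty object. The sketch below shows one way to flatten those defaults and overlay your application's data. The helper names `collect_default_variables` and `build_inputs` are hypothetical, and the assumption that every section is either a dict or an empty list is inferred only from this one example response.

```python
def collect_default_variables(variables):
    """Flatten PromptHub's per-section variables into a single dict.

    Assumption: each section is either a dict of name -> default value
    or an empty list, as in the example response.
    """
    defaults = {}
    for section in ("system_message", "prompt"):
        values = variables.get(section) or {}
        if isinstance(values, dict):
            defaults.update(values)
    return defaults


def build_inputs(variables, app_values):
    """Overlay application data on the defaults and flag empty values."""
    inputs = {**collect_default_variables(variables), **app_values}
    missing = [name for name, value in inputs.items() if value in (None, "")]
    if missing:
        raise ValueError(f"No value supplied for: {', '.join(missing)}")
    return inputs


# Variables block copied from the example response
example_variables = {
    "system_message": [],
    "prompt": {"product_feedback": "the product is great!"},
}

inputs = build_inputs(example_variables, {"product_feedback": "Checkout keeps timing out"})
print(inputs)
```

The resulting dictionary has the same shape as the inputs passed to the chain later in this guide.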

Here’s a more focused version that has the data we’ll use in later steps.

{

"data": {

"formatted_request": {

"model": "claude-3-5-sonnet-latest",

"system": "You are a product feedback classifier",

"messages": [

{

"role": "user",

"content": "Classify the following feedback: {{ product_feedback }}"

}

],

"max_tokens": 8192,

"temperature": 0.5,

"top_p": 1,

"stream": false

},

"variables": {

"product_feedback": "the product is great!"

}

},

"status": "OK",

"code": 200

}
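To make the focused response concrete, here is a minimal sketch that validates a payload of this shape and pulls out the fields used in later steps. The function name `unpack_head_response` and the returned key names are hypothetical, and the status check assumes that successful responses always carry "status": "OK" and "code": 200, as in the example.

```python
import json


def unpack_head_response(payload_text):
    """Validate a /head payload and return the pieces used in later steps."""
    payload = json.loads(payload_text)
    if payload.get("status") != "OK" or payload.get("code") != 200:
        raise ValueError(
            f"Unexpected PromptHub response: {payload.get('status')} ({payload.get('code')})"
        )

    request = payload["data"]["formatted_request"]
    parameter_names = ("max_tokens", "temperature", "top_p")
    return {
        "model": request["model"],
        "system": request.get("system", ""),
        "user_prompt": request["messages"][0]["content"],
        # Keep only the parameters that are actually present
        "parameters": {name: request[name] for name in parameter_names if name in request},
    }


# Sample payload mirroring the focused response
sample_payload = json.dumps({
    "data": {
        "formatted_request": {
            "model": "claude-3-5-sonnet-latest",
            "system": "You are a product feedback classifier",
            "messages": [
                {"role": "user", "content": "Classify the following feedback: {{ product_feedback }}"}
            ],
            "max_tokens": 8192,
            "temperature": 0.5,
            "top_p": 1,
            "stream": False,
        }
    },
    "status": "OK",
    "code": 200,
})

prompt_parts = unpack_head_response(sample_payload)
print(prompt_parts["model"])
print(prompt_parts["parameters"])
```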

Now let’s write a script to hit the /head endpoint and retrieve the latest prompt and configuration:

import requests

def fetch_prompt_from_prompthub(api_key, project_id):
    # The /head endpoint returns the latest committed version of the project
    url = f'https://app.prompthub.us/api/v1/projects/{project_id}/head'
    headers = {
        'Authorization': f'Bearer {api_key}',
        'Accept': 'application/json'
    }
    response = requests.get(url, headers=headers)
    if response.status_code == 200:
        # The prompt and model configuration live under the "data" key
        return response.json()['data']
    else:
        raise Exception(f"Failed to fetch prompt: {response.status_code} - {response.text}")

Using the branch query parameter (optional)

In PromptHub, you can create multiple branches for your prompts.

You can retrieve the latest prompt from a branch by passing the branch parameter in the API request. This allows you to deploy prompts separately across different environments.

Here’s how you would retrieve a prompt from a staging branch:

url = f'https://app.prompthub.us/api/v1/projects/{project_id}/head?branch=staging'
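If you switch branches often, it may be cleaner to build the URL in one place instead of editing the string by hand. Here is a minimal sketch, where `build_head_url` and `BASE_URL` are hypothetical names and only the branch query parameter described above is assumed to exist:

```python
from urllib.parse import urlencode

BASE_URL = "https://app.prompthub.us/api/v1"


def build_head_url(project_id, branch=None):
    """Return the /head URL for a project, optionally scoped to a branch."""
    url = f"{BASE_URL}/projects/{project_id}/head"
    if branch:
        # urlencode escapes any special characters in the branch name
        url += "?" + urlencode({"branch": branch})
    return url


print(build_head_url(5474))
# https://app.prompthub.us/api/v1/projects/5474/head
print(build_head_url(5474, branch="staging"))
# https://app.prompthub.us/api/v1/projects/5474/head?branch=staging
```

With no branch argument, the URL is the same one used in the fetch script earlier in this guide.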

Here is a 2-min demo video for more information on creating branches and managing prompt commits in PromptHub.

The next step is to replace PromptHub-style placeholders ({{variable}}) with LangChain-compatible placeholders ({variable}) and create a ChatPromptTemplate.

Injecting data into variables and creating the ChatPromptTemplate in LangChain

As a reminder, here is what the formatted_request object looks like:

{

"data": {

"formatted_request": {

"model": "claude-3-5-sonnet-latest",

"system": "You are a product feedback classifier",

"messages": [

{

"role": "user",

"content": "Classify the following feedback: {{ product_feedback }}"

}

],

"max_tokens": 8192,

"temperature": 0.5,

"top_p": 1,

"stream": false

},

"variables": {

"product_feedback": "the product is great!"

}

},

"status": "OK",

"code": 200

}

Note: Anthropic structures the system message outside the messages array, unlike OpenAI.

Here is a script that will look for variables in both the system message and the prompt and update their syntax, as well as create the ChatPromptTemplate, which is what will be sent to the LLM for processing.

import re

from langchain.prompts import ChatPromptTemplate

def create_prompt_templates(data):
    system_message = data['formatted_request'].get('system', "").strip()
    user_message = data['formatted_request']['messages'][0]['content']

    # Replace PromptHub-style placeholders with LangChain-compatible placeholders
    # in both the system message and the user prompt
    placeholder_pattern = r"\{\{\s*(\w+)\s*\}\}"
    cleaned_system_message = re.sub(placeholder_pattern, r"{\1}", system_message)
    cleaned_user_message = re.sub(placeholder_pattern, r"{\1}", user_message)

    # Create ChatPromptTemplate
    prompt = ChatPromptTemplate.from_messages([
        ("system", cleaned_system_message),
        ("human", cleaned_user_message)
    ])
    return prompt

The script:

- Scans both the system message and user prompt for variables in double curly braces syntax (`{{variable}}`).

- Replaces placeholder variables with LangChain-compatible syntax (`{variable}`).

- Creates a ChatPromptTemplate that combines the system and user messages, ready for processing

After running this script, you'll have a fully formatted ChatPromptTemplate with correct variable syntax, ready to be used in your LangChain workflow and sent to an LLM.
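One edge case to keep in mind: if a prompt contains literal braces (for example, a JSON snippet showing the desired output format), a plain regex swap leaves those braces in place, and a brace-based template would then read them as variables. The sketch below is one possible way to handle that, assuming LangChain's default template format follows Python's str.format brace rules, where doubled braces are literals. The helper name `to_langchain_template` is hypothetical, and str.format is used here only as a stand-in to show how the converted text behaves.

```python
import re

# Matches PromptHub placeholders such as {{ product_feedback }}
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def to_langchain_template(text):
    """Convert {{ var }} to {var} and escape any other literal braces."""
    # With one capture group, split() alternates literal text and variable names
    parts = PLACEHOLDER.split(text)
    converted = []
    for index, part in enumerate(parts):
        if index % 2:
            # Odd positions hold the captured variable names
            converted.append("{" + part + "}")
        else:
            # Even positions hold literal text: double braces so they stay literal
            converted.append(part.replace("{", "{{").replace("}", "}}"))
    return "".join(converted)


raw = 'Reply as JSON like {"label": "bug"}. Feedback: {{ product_feedback }}'
template = to_langchain_template(raw)
print(template)
print(template.format(product_feedback="The product is great!"))
```

For prompts without literal braces, such as the feedback classifier in this guide, the result is identical to the simpler regex replacement.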

Calling the model using LangChain

Now that we’ve retrieved the prompt and created the ChatPromptTemplate, all we need to do is send the request to Anthropic through LangChain.

Here’s how you can call the model and pull data, like the model and parameters you set in PromptHub, from the formatted_request object.

# Step 3: Call the model using LangChain with dynamic parameters
def invoke_chain(prompt, inputs, formatted_request, anthropic_api_key):
    # Initialize the ChatAnthropic model with parameters from PromptHub
    chat = ChatAnthropic(
        model_name=formatted_request['model'],  # Retrieve model dynamically
        temperature=formatted_request.get('temperature', 0.5),  # Retrieve temperature dynamically
        max_tokens=formatted_request.get('max_tokens', 512),  # Retrieve max_tokens dynamically
        anthropic_api_key=anthropic_api_key
    )

    # Combine the prompt with the model and invoke
    chain = prompt | chat
    response = chain.invoke(inputs)
    return response

Note that we are pulling the model and parameters from the formatted_request object.
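If you want to forward more than two parameters, one option is to collect them into a dictionary first and skip anything PromptHub left unset. This is a rough sketch rather than part of the integration itself: `model_kwargs_from_request` is a hypothetical helper, the fallback values mirror the defaults used in the function above, and passing top_p through to ChatAnthropic is an assumption you should check against your installed version.

```python
def model_kwargs_from_request(formatted_request, defaults=None):
    """Collect model settings from formatted_request, skipping unset values."""
    if defaults is None:
        # Fallbacks mirror the defaults used in invoke_chain
        defaults = {"temperature": 0.5, "max_tokens": 512}

    kwargs = {"model_name": formatted_request["model"]}
    # top_p is included as an assumption; drop it if your model class rejects it
    for name in ("temperature", "max_tokens", "top_p"):
        value = formatted_request.get(name, defaults.get(name))
        if value is not None:
            kwargs[name] = value
    return kwargs


example_request = {
    "model": "claude-3-5-sonnet-latest",
    "max_tokens": 8192,
    "temperature": 0.5,
    "top_p": 1,
    "stream": False,
}

model_kwargs = model_kwargs_from_request(example_request)
print(model_kwargs)
```

The dictionary could then be unpacked into the model constructor alongside the API key, for example `ChatAnthropic(**model_kwargs, anthropic_api_key=anthropic_api_key)`.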

Bringing it all together

Here’s a script that combines all the steps above: it retrieves the prompt from PromptHub, updates variable syntax and structure, and sends the prompt to Anthropic using LangChain.

import requests
import re

from langchain.prompts import ChatPromptTemplate
from langchain_anthropic import ChatAnthropic

# Step 1: Fetch the prompt and model configuration from PromptHub
def fetch_prompt_from_prompthub(api_key, project_id):
    url = f'https://app.prompthub.us/api/v1/projects/{project_id}/head'
    headers = {
        'Authorization': f'Bearer {api_key}',
        'Accept': 'application/json'
    }
    response = requests.get(url, headers=headers)
    if response.status_code == 200:
        return response.json()['data']
    else:
        raise Exception(f"Failed to fetch prompt: {response.status_code} - {response.text}")

# Step 2: Replace placeholders and create prompt template
def create_prompt_templates(data):
    system_message = data['formatted_request'].get('system', "").strip()
    user_message = data['formatted_request']['messages'][0]['content']

    # Replace PromptHub-style placeholders {{ variable }} with LangChain-compatible {variable}
    # in both the system message and the user prompt
    placeholder_pattern = r"\{\{\s*(\w+)\s*\}\}"
    cleaned_system_message = re.sub(placeholder_pattern, r"{\1}", system_message)
    cleaned_user_message = re.sub(placeholder_pattern, r"{\1}", user_message)

    # Create ChatPromptTemplate
    prompt = ChatPromptTemplate.from_messages([
        ("system", cleaned_system_message),
        ("human", cleaned_user_message)
    ])
    return prompt

# Step 3: Call the model using LangChain with dynamic parameters
def invoke_chain(prompt, inputs, formatted_request, anthropic_api_key):
    # Initialize the ChatAnthropic model with parameters from PromptHub
    chat = ChatAnthropic(
        model_name=formatted_request['model'],  # Retrieve model dynamically
        temperature=formatted_request.get('temperature', 0.5),  # Retrieve temperature dynamically
        max_tokens=formatted_request.get('max_tokens', 512),  # Retrieve max_tokens dynamically
        anthropic_api_key=anthropic_api_key
    )

    # Combine the prompt with the model and invoke
    chain = prompt | chat
    response = chain.invoke(inputs)
    return response

# Main Script
if __name__ == "__main__":
    # Define your API keys directly here
    PROMPTHUB_API_KEY = "your_prompthub_api_key"  # Replace with your PromptHub API key
    ANTHROPIC_API_KEY = "your_anthropic_api_key"  # Replace with your Anthropic API key
    PROJECT_ID = "your_project_id"  # Replace with your PromptHub project ID
    inputs = {"product_feedback": "The product is great!"}  # Example input

    try:
        # Step 1: Fetch data from PromptHub
        data = fetch_prompt_from_prompthub(PROMPTHUB_API_KEY, PROJECT_ID)
        print("Fetched Data from PromptHub:")
        print(data)

        # Step 2: Create prompt templates
        prompt = create_prompt_templates(data)

        # Step 3: Invoke the model with dynamic parameters
        response = invoke_chain(prompt, inputs, data['formatted_request'], ANTHROPIC_API_KEY)
        print("Response from LLM:")
        print(response.content)
    except Exception as e:
        print(f"Error: {e}")
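The main script defines the keys inline to keep the example short. Outside of local testing, you may prefer to read them from environment variables so they stay out of source control. Here is a minimal sketch, assuming the variable names PROMPTHUB_API_KEY, ANTHROPIC_API_KEY, and PROMPTHUB_PROJECT_ID (these names and the `load_settings` helper are hypothetical, not something PromptHub or LangChain requires):

```python
import os

# Hypothetical environment variable names; rename to match your deployment
REQUIRED_SETTINGS = ("PROMPTHUB_API_KEY", "ANTHROPIC_API_KEY", "PROMPTHUB_PROJECT_ID")


def load_settings(env=None):
    """Read the required settings, failing early if any are missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_SETTINGS}


# Example with an explicit mapping instead of the real environment
settings = load_settings({
    "PROMPTHUB_API_KEY": "your_prompthub_api_key",
    "ANTHROPIC_API_KEY": "your_anthropic_api_key",
    "PROMPTHUB_PROJECT_ID": "your_project_id",
})
print(sorted(settings))
```

Calling `load_settings()` with no argument reads from the real environment, and the returned values can replace the three constants at the top of the main block.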

By using the /head endpoint you can:

- Implement once, update automatically: Set up your integration once, and any updates to prompts, models, or parameters will instantly reflect in your LangChain workflow

- Enable non-technical team members: Non-technical team members can easily test and make prompt changes and have them reflect instantly. No coding needed.

- Decouple prompts from code: Make prompt updates, adjust model configurations, or tweak parameters without needing to redeploy your code

- Establish clear version control for prompts: Always retrieve the latest version of a prompt

- Deploy to different environments: Use the branch parameter to test prompts in staging before deploying them to production.

Wrapping up

If you’re using LangChain and want to simplify prompt management and testing for your entire team, integrating with PromptHub is the way to go. With a one-time setup, PromptHub streamlines prompt management, versioning, and updates, making it easy for both technical and non-technical team members to collaborate and optimize LLM workflows without touching the code.